Artificial Intelligence’s Use and Rapid Growth Highlight Its Possibilities and Perils

The rise of artificial intelligence has created growing excitement and much debate about its potential to revolutionize entire industries. At its best, AI could improve medical diagnosis, identify potential national security threats more quickly, and solve crimes. But there are also significant concerns in areas including education, intellectual property, and privacy.

Today’s WatchBlog post looks at our recent work on how Generative AI systems (for example, ChatGPT and Bard) and other forms of AI have the potential to provide new capabilities, but require responsible oversight.

The promise and perils of current AI use

Our recent work has looked at three major areas of AI advancement.

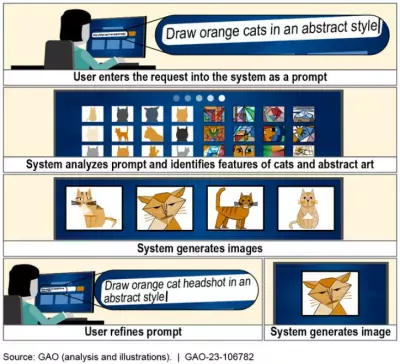

Generative AI systems can create text (apps like ChatGPT and Bard, for example), images, audio, video, and other content when prompted by a user. These growing capabilities could be used in a variety of fields such as education, government, law, and entertainment. As of early 2023, some emerging generative AI systems had reached more than 100 million users. Advanced chatbots, virtual assistants, and language translation tools are examples of generative AI systems in widespread use. As news headlines indicate, this technology continues to gain global attention for its benefits. But there are concerns too, such as how it could be used to replicate work from authors and artists, generate code for more effective cyberattacks, and even help produce new chemical warfare compounds, among other things. Our recent Spotlight on Generative AI takes a deeper look at how this technology works.

Machine learning is a second application of AI growing in use. This technology is being used in fields that require advanced imagery analysis, from medical diagnostics to military intelligence. In a report last year, we looked at how machine learning was used to assist the medical diagnostic process. It can be used to identify hidden or complex patterns in data, detect diseases earlier, and improve treatments. We found that benefits include more consistent analysis of medical data and increased access to care, particularly for underserved populations. However, our work also looked at how limitations and bias in the data used to develop AI tools can reduce their safety and effectiveness and contribute to inequalities for certain patient populations.

Facial recognition is another type of AI technology that has shown both promise and peril in its use. Federal, state, and local law enforcement agencies have used facial recognition technology to support criminal investigations and video surveillance. It is also used at ports of entry to match travelers to their passports. While this technology can help identify potential criminals more quickly, including some who might not have been identified without it, our work has also found some concerns with its use. Despite improvements, inaccuracies and bias in some facial recognition systems could result in more frequent misidentification for certain demographics. There are also concerns about whether the technology violates individuals’ personal privacy.

Ensuring accountability and mitigating the risks of AI use

As AI use continues its rapid expansion, how can we mitigate the risks and ensure these systems are working appropriately for all?

Appropriate oversight will be critical to ensuring AI technologies remain effective and keep our data safeguarded. We developed an AI Accountability Framework to help Congress address the complexities, risks, and societal consequences of emerging AI technologies. Our framework lays out key practices to help ensure accountability and responsible AI use by federal agencies and other entities involved in the design, development, deployment, and continuous monitoring of AI systems. It is built around four principles (governance, data, performance, and monitoring), which provide structures and processes to manage, operate, and oversee the implementation of AI systems.

AI technologies have enormous potential for good, but much of their power comes from their ability to outperform human abilities and comprehension. From commercial products to strategic competition among world powers, AI is poised to have a dramatic influence on both daily life and global events. This makes accountability critical to its application, and the framework can be employed to ensure that humans run the system—not the other way around.

- Comments on GAO’s WatchBlog? Contact blog@gao.gov.