Deconstructing Deepfakes—How do they work and what are the risks?

Last month, Microsoft introduced a new deepfake detection tool. Weeks ago, Intel launched another. As more companies follow suit and concerns about the technology grow, today's WatchBlog looks at how deepfakes work and the policy questions they raise.

What is a deepfake?

A deepfake is a video, photo, or audio recording that seems real but has been manipulated using artificial intelligence (AI). The underlying technology can replace faces, manipulate facial expressions, synthesize faces, and synthesize speech. These tools are used most often to depict people saying or doing something they never said or did.

How do deepfakes work?

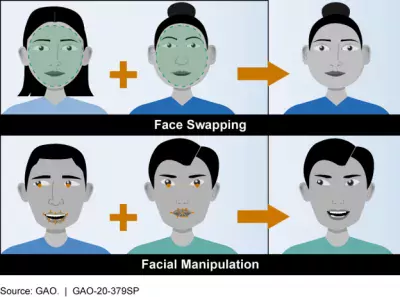

Deepfake videos commonly swap faces or manipulate facial expressions. The image below illustrates how this is done. In face swapping, the face on the left is placed on another person’s body. In facial manipulation, the expressions of the face on the left are imitated by the face on the right.

Face Swapping and Facial Manipulation


Deepfakes rely on artificial neural networks, which are computer systems that recognize patterns in data. Developing a deepfake photo or video typically involves feeding hundreds or thousands of images into the artificial neural network, “training” it to identify and reconstruct patterns—usually faces.
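To make the "training" step concrete, here is a toy sketch of the shared-encoder, two-decoder autoencoder design popularized by early open-source face-swapping tools. It is an illustration under heavy assumptions, not the method of any particular tool. Random vectors stand in for face images, the layers are linear rather than deep convolutional networks, and the function names (`make_toy_faces`, `train_face_swap`, `swap_a_to_b`) and all sizes and learning rates are hypothetical.

```python
import numpy as np


def make_toy_faces(rng, n, d, k):
    """Hypothetical stand-in for two people's face crops. Each "image" is a
    d-pixel vector made by rendering a random k-dim "expression" code through
    a person-specific linear map with orthonormal rows."""
    render_a = np.linalg.qr(rng.normal(size=(d, k)))[0].T   # shape (k, d)
    render_b = np.linalg.qr(rng.normal(size=(d, k)))[0].T
    faces_a = rng.normal(size=(n, k)) @ render_a
    faces_b = rng.normal(size=(n, k)) @ render_b
    return faces_a, faces_b


def train_face_swap(faces_a, faces_b, k=4, lr=0.02, steps=2000, seed=0):
    """Train one shared encoder and one decoder per person with plain
    gradient descent on squared reconstruction error (linear layers only)."""
    rng = np.random.default_rng(seed)
    d = faces_a.shape[1]
    enc = rng.normal(scale=0.1, size=(d, k))     # shared: pixels -> code
    dec_a = rng.normal(scale=0.1, size=(k, d))   # code -> person A pixels
    dec_b = rng.normal(scale=0.1, size=(k, d))   # code -> person B pixels
    n_a, n_b = len(faces_a), len(faces_b)
    history = []
    for _ in range(steps):
        code_a, code_b = faces_a @ enc, faces_b @ enc
        err_a = code_a @ dec_a - faces_a         # reconstruction residuals
        err_b = code_b @ dec_b - faces_b
        history.append(np.sum(err_a**2) / n_a + np.sum(err_b**2) / n_b)
        g_a, g_b = 2 * err_a / n_a, 2 * err_b / n_b   # dLoss/d(output)
        # The shared encoder gets gradient from both people;
        # each decoder only from its own person.
        grad_enc = faces_a.T @ (g_a @ dec_a.T) + faces_b.T @ (g_b @ dec_b.T)
        dec_a = dec_a - lr * (code_a.T @ g_a)
        dec_b = dec_b - lr * (code_b.T @ g_b)
        enc = enc - lr * grad_enc
    return enc, dec_a, dec_b, history


def swap_a_to_b(faces_a, enc, dec_b):
    """The swap itself: encode person A's frames, decode with B's decoder."""
    return (faces_a @ enc) @ dec_b


rng = np.random.default_rng(42)
faces_a, faces_b = make_toy_faces(rng, n=200, d=16, k=4)
enc, dec_a, dec_b, history = train_face_swap(faces_a, faces_b)
print(f"reconstruction loss: {history[0]:.3f} -> {history[-1]:.3f}")
print(swap_a_to_b(faces_a, enc, dec_b).shape)   # (200, 16)
```

Sharing the encoder pushes it toward a code that both decoders can read, which is what lets B's decoder render A's frames. With toy data the swapped output has no visual meaning, and nothing in this linear version forces the two people's codes to line up. Real tools rely on much larger networks trained on the hundreds or thousands of face images described above.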

How can you spot a deepfake?

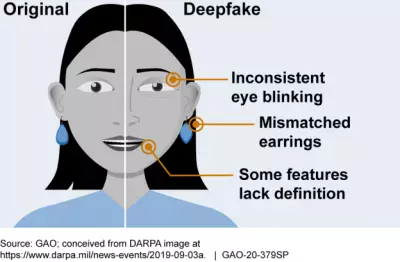

The figure below illustrates some of the ways to tell a deepfake from the real thing. To learn more about how to identify a deepfake and about the underlying technology, check out our recent Spotlight on this technology.


What are the benefits of these tools?

Voices and likenesses developed using deepfake technology can be used in movies to achieve a creative effect or maintain a cohesive story when the entertainers themselves are not available. For example, in the latest Star Wars movies, this technology was used to recreate characters whose actors had died or to show characters as they appeared in their youth. Retailers have also used this technology to let customers try on clothing virtually.

What risks do they pose?

Despite benign and legitimate applications such as film and commerce, deepfakes are more commonly used for exploitation. Some studies have shown that much of the deepfake content online is pornographic, and deepfake pornography disproportionately victimizes women.

There is also concern about potential growth in the use of deepfakes for disinformation. Deepfakes could be used to influence elections or incite civil unrest, or as a weapon of psychological warfare. They could also lead people to dismiss legitimate evidence of wrongdoing and, more generally, undermine public trust.

What can be done to protect people?

As noted above, researchers and tech companies such as Microsoft and Intel have experimented with several methods to detect deepfakes. These methods typically use AI to analyze videos for digital artifacts or details that deepfakes fail to imitate realistically, such as blinking or facial tics. But even with these interventions, a number of policy questions about deepfakes still need to be answered. For example:

- What can be done to educate the public about deepfakes to protect them and help them tell real from fake?

- What rights do individuals have to privacy when it comes to the use of deepfake technology?

- What First Amendment protections do creators of deepfake videos, photos, and more have?
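The detection idea described above, looking for details such as blinking that deepfakes fail to imitate, can be illustrated with a simple hand-written heuristic. This is a minimal sketch, assuming per-frame eye landmarks have already been extracted by some face-landmark detector (not shown). It uses the eye aspect ratio (EAR), a ratio of vertical to horizontal distances between six eye landmarks that drops toward zero when the eye closes, counts blinks, and flags a clip whose blink rate falls below a cutoff. It is not the method Microsoft, Intel, or any other company uses; the function names, the 0.2 EAR threshold, and the 5-blinks-per-minute cutoff are hypothetical values chosen for illustration.

```python
import numpy as np


def eye_aspect_ratio(eye):
    """EAR from six (x, y) eye landmarks ordered p1..p6: p1 and p4 are the
    corners, p2/p3 the upper lid, p6/p5 the matching lower-lid points."""
    p = np.asarray(eye, dtype=float)
    vertical = np.linalg.norm(p[1] - p[5]) + np.linalg.norm(p[2] - p[4])
    horizontal = np.linalg.norm(p[0] - p[3])
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames whose EAR is
    below `threshold` (hypothetical defaults)."""
    blinks, run = 0, 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:          # clip ended in the middle of a blink
        blinks += 1
    return blinks


def looks_suspicious(ear_per_frame, fps, min_blinks_per_minute=5.0):
    """Return (flag, blinks_per_minute); flag is True when the clip blinks
    less often than the hypothetical cutoff."""
    minutes = len(ear_per_frame) / fps / 60.0
    rate = count_blinks(ear_per_frame) / minutes
    return rate < min_blinks_per_minute, rate


fps = 30
open_eye = np.full(60 * fps, 0.3)          # one minute, eyes never close
blinking = open_eye.copy()
for start in range(0, len(blinking), 3 * fps):
    blinking[start:start + 3] = 0.1        # a 3-frame blink every 3 seconds
print(looks_suspicious(blinking, fps))     # (False, 20.0)
print(looks_suspicious(open_eye, fps))     # (True, 0.0)
```

On its own, a single hand-tuned cue like this is fragile; the detection methods described above instead use AI to look for such artifacts.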

Deepfakes are powerful tools that can be used for exploitation and disinformation. With advances making them more difficult to detect, these technologies require a deeper look.

- Comments on GAO’s WatchBlog? Contact blog@gao.gov



GAO's mission is to provide Congress with fact-based, nonpartisan information that can help improve federal government performance and ensure accountability for the benefit of the American people. GAO launched its WatchBlog in January 2014 as part of its continuing effort to reach its audiences, Congress and the American people, where they are currently looking for information.

The blog format allows GAO to provide a little more context about its work than it can offer on its other social media platforms. Posts will tie GAO work to current events and the news; show how GAO’s work is affecting agencies or legislation; highlight reports, testimonies, and issue areas where GAO does work; and provide information about GAO itself, among other things.
